Behavioral Measures of Sound Perception

Non-speech vocalizations are critical for communication in all mammals. Our analysis of the acoustic features of non-speech and speech vocalizations across many animal species finds that temporal cues, including abrupt onsets, offsets, and vocalization durations, can be used to objectively categorize vocalizations, for example to distinguish human baby cries from rodent pup cries (Khatami et al., 2018). We examine the ability of rodents and humans to categorize complex synthetic speech sequences using similar two-alternative choice tasks. Our perceptual studies find categorical-like discrimination, in both humans and rodents, between synthetic vocalizations that lack spectral cues (SFN abstracts, 20198).
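To make the idea of temporal cues concrete, here is a minimal sketch that extracts onset time, offset time, duration, and rise time (a simple proxy for how abrupt an onset is) from a vocalization's amplitude envelope. It is an illustration under stated assumptions, not the analysis used in Khatami et al. (2018). It assumes the envelope has already been computed (for example, by rectifying and smoothing the waveform) and that a fixed amplitude threshold separates sound from silence. The names `Burst` and `detect_bursts` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Burst:
    """One supra-threshold sound burst (hypothetical feature container)."""
    onset_s: float   # time the envelope first reaches the threshold
    offset_s: float  # time the envelope drops back below the threshold
    rise_s: float    # time from onset to peak; smaller values mean a more abrupt onset

    @property
    def duration_s(self) -> float:
        return self.offset_s - self.onset_s


def detect_bursts(envelope: List[float], fs: float, threshold: float) -> List[Burst]:
    """Split an amplitude envelope (sampled at fs Hz) into supra-threshold bursts.

    Assumes a precomputed envelope and a fixed threshold; both are illustrative choices.
    """
    bursts: List[Burst] = []
    start: Optional[int] = None
    # A -inf sentinel guarantees that a burst still open at the end gets closed.
    for i, value in enumerate(list(envelope) + [float("-inf")]):
        if start is None and value >= threshold:
            start = i  # burst onset sample
        elif start is not None and value < threshold:
            segment = envelope[start:i]
            peak = start + segment.index(max(segment))  # first sample at the burst maximum
            bursts.append(Burst(onset_s=start / fs, offset_s=i / fs,
                                rise_s=(peak - start) / fs))
            start = None
    return bursts


if __name__ == "__main__":
    env = [0, 0, 1, 3, 2, 0, 0, 0, 2, 2, 2, 2, 0]
    for b in detect_bursts(env, fs=1000.0, threshold=1.0):
        print(f"onset={b.onset_s:.3f}s duration={b.duration_s:.3f}s rise={b.rise_s:.3f}s")
```

Features like these, pooled across many calls, could then feed any standard classifier. Measured durations depend strongly on the threshold, so in practice it would need to be set relative to the recording's noise floor.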

Physiologic Measures of Neural Coding for Sound Perception

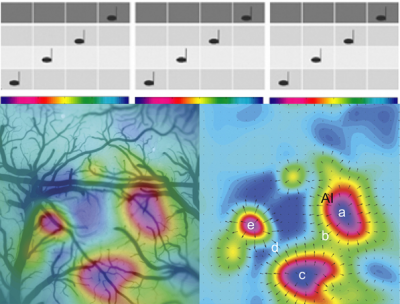

As mammals perceive sound, cortical activity can be measured as changes in oxygen-dependent or calcium-dependent signals in the brain using highly sensitive video cameras. We see many topographically organized neural response maps at higher levels of auditory cortex (see rainbow color maps). This is significant because it indicates that each cortical area processes the same sound features in parallel, but in a different way (Higgins et al., J Neuroscience 2010).
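One common way to turn imaging data into a topographic map is to assign each pixel the stimulus value that evokes its largest response and then color-code that value. The sketch below does this for tone frequency. It assumes one baseline-corrected response-amplitude image per tone, stored as nested lists keyed by frequency in Hz; this is an illustrative assumption, not the processing pipeline from Higgins et al. (2010), and `best_frequency_map` is a hypothetical name.

```python
from typing import Dict, List

Image = List[List[float]]  # rows x columns of pixel response amplitudes


def best_frequency_map(responses: Dict[float, Image]) -> List[List[float]]:
    """Return, for every pixel, the tone frequency that evoked the largest response.

    Hypothetical helper: assumes all images share the same shape and are
    already baseline-corrected.
    """
    freqs = sorted(responses)
    n_rows = len(responses[freqs[0]])
    n_cols = len(responses[freqs[0]][0])
    bf_map: List[List[float]] = []
    for r in range(n_rows):
        row: List[float] = []
        for c in range(n_cols):
            # max() scans frequencies in ascending order, so ties go to the lowest one.
            row.append(max(freqs, key=lambda f: responses[f][r][c]))
        bf_map.append(row)
    return bf_map


if __name__ == "__main__":
    demo = {
        2000.0: [[0.9, 0.1], [0.2, 0.1]],
        8000.0: [[0.3, 0.8], [0.1, 0.2]],
        32000.0: [[0.1, 0.2], [0.7, 0.9]],
    }
    for row in best_frequency_map(demo):
        print(row)
```

A real analysis would also mask pixels whose best response does not rise above the noise level before coloring the map.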

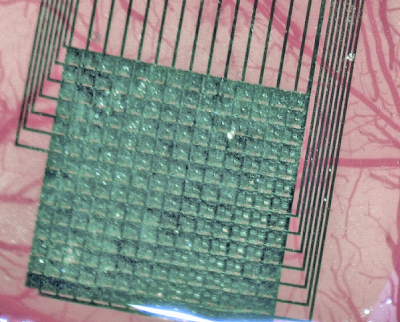

Brain-Computer Interface Technologies for Brain Mapping

Surgically implanted electrocorticographic (ECoG) arrays are like a band-aid resting on the surface of the brain. ECoG arrays provide powerful cross-validation and a much faster, high-resolution read-out of brain responses than prior approaches. These arrays have potential for use in human therapy in the future (Escabi, Read* et al., J Neurophys, 2014).

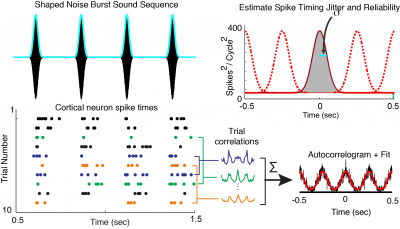

Neuron Spiking Patterns Encode Shape of Sound

It was previously thought that cortical neurons keep track of sound rhythms by firing one spike with each beat of the rhythm, like the tick-tock of a metronome. We discovered that some cortical neurons spike only at the onset of sound bursts (not shown), while others respond with sustained, “jittered” spiking (bottom left; each dot is a neuronal spike) that mirrors the shape and duration of the sound bursts. Cross-correlating all spike times generates an averaged correlation function (autocorrelogram), which we fit (red line) with a modified Gaussian model to quantify the neural code for each sound type. We find that neural response duration is correlated with sound burst duration, providing a spike-timing neural code for timing cues in sound (Lee et al., J Neurophysiology 2016).
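The correlogram analysis described above can be sketched roughly as follows. This version builds a shuffled autocorrelogram (time differences between spikes from different repeated trials of the same sound, so that same-trial pairs do not contribute) and summarizes its width with a moment-based standard deviation. That is a deliberate simplification: the published analysis fits a modified Gaussian model whose exact form is not given here, so a plain Gaussian width estimate stands in for it. The function names, bin sizes, and the trial-shuffling choice are assumptions for illustration.

```python
import math
from typing import List, Tuple


def shuffled_autocorrelogram(trials: List[List[float]], max_lag: float,
                             bin_width: float) -> Tuple[List[float], List[int]]:
    """Histogram of spike-time differences (seconds) between spikes from *different* trials.

    Hypothetical helper: lags in [-max_lag, max_lag) are counted in bins of bin_width.
    Returns (bin centers, counts).
    """
    n_bins = int(round(2 * max_lag / bin_width))
    counts = [0] * n_bins
    for i, a in enumerate(trials):
        for j, b in enumerate(trials):
            if i == j:
                continue  # skip same-trial pairs
            for ta in a:
                for tb in b:
                    lag = tb - ta
                    if -max_lag <= lag < max_lag:
                        k = int((lag + max_lag) // bin_width)
                        counts[min(k, n_bins - 1)] += 1  # guard against float round-up
    centers = [-max_lag + (k + 0.5) * bin_width for k in range(n_bins)]
    return centers, counts


def gaussian_width(centers: List[float], counts: List[int]) -> float:
    """Moment-based standard deviation of the correlogram, used as a Gaussian width.

    Stand-in for a least-squares fit of the (unspecified) modified Gaussian model.
    """
    total = sum(counts)
    if total == 0:
        raise ValueError("no spike pairs within max_lag")
    mean = sum(c * n for c, n in zip(centers, counts)) / total
    var = sum(n * (c - mean) ** 2 for c, n in zip(centers, counts)) / total
    return math.sqrt(var)


if __name__ == "__main__":
    trials = [[0.010], [0.012], [0.008]]  # toy spike times (s), one spike per trial
    centers, counts = shuffled_autocorrelogram(trials, max_lag=0.005, bin_width=0.002)
    print(counts, f"width = {gaussian_width(centers, counts) * 1000:.2f} ms")
```

Computing this width for each sound type and comparing it with the corresponding burst durations (for example, with a Pearson correlation) is one way to test whether response duration tracks sound burst duration.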

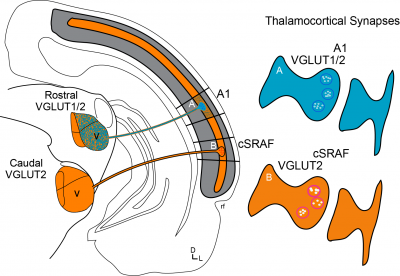

Parallel Thalamocortical Sound Processing Pathways

At first glance, one might think that all sound-processing cortical areas are wired to process sound in the same way. However, we find this is not the case. For example, separate populations of neurons form the thalamic pathways to A1 and cSRAF. The pathway to A1 expresses genes encoding two glutamate transporters (see blue pathway above), whereas the pathway to cSRAF expresses only one (see orange pathway above). We think these different gene expression patterns allow the pathways to encode sound with different frequency and timing resolution. This is significant because it suggests that gene expression reflects neural function (Storace et al., J Neuroscience, 2010).